HANDBOOK

How do I use Generative AI safely at my company?

In the modern business landscape, Generative AI has emerged as a revolutionary tool that enables companies to automate tasks, gain insights from data, and deliver personalized experiences. However, as powerful as it is, using generative AI can pose risks, especially regarding data privacy and governance.

In this blog post, we will explore three alternative ways to safely adopt Generative AI in a corporate environment:

Housing a local Generative AI model;

Purchasing Microsoft Azure dedicated instances of OpenAI models;

Adopting AidaMask together with existing commercial models.

Housing local Generative AI models

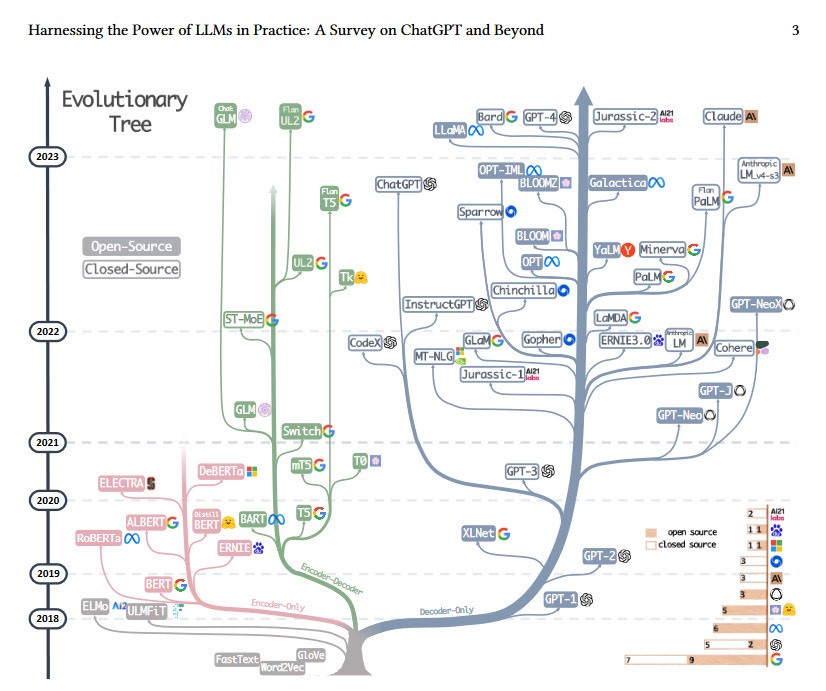

Locally hosted commercial or open-source models are a good option for companies that want to use generative AI while keeping data in-house. Models like BLOOM, GPT-3, GPT-Neo, CodeGen, and T5 are popular choices.

Housing local Generative AI models: Use cases

Among several existing use cases:

Amazon has developed Amazon CodeWhisperer, an AI coding companion that helps developers build applications faster and more securely by providing whole line and full function code suggestions in their integrated development environment (IDE). Amazon has also launched Amazon Bedrock, a suite of generative AI development tools that allow customers to build generative AI applications using pre-trained foundation models.

Bloomberg has developed BloombergGPT, a generative AI model based on BLOOM and trained on financial data, which is integrated into the firm's terminal software. This model can replace company names with stock tickers, identify names in documents, write headlines, and determine how a headline reflects a company's financial outlook.

Kensho Technologies is another company that has built AI-powered solutions, such as Kensho Scribe, which transcribes expert calls with high accuracy, and Kensho NERD, which highlights entities in transcribed text to assist compliance teams in preparing transcripts for their platform. Kensho Technologies has also launched a Natural Language Processing (NLP) solution called Kensho Classify, which derives value from vast amounts of text and documents by making content more discoverable, enabling analysis of text, and smart search.

Tegus, a modern research platform for leading investors, has used Kensho's AI to reduce their administrative workload by 70% and improve their compliance funnel. They leverage Kensho Scribe to transcribe expert calls and Kensho NERD to highlight entities in the transcribed text, which helps their compliance team prepare transcripts for their platform. These companies have successfully implemented generative AI in-house, demonstrating the potential of this technology to drive innovation and improve business processes.

Housing local Generative AI models: Costs

Local implementation of generative AI typically entails high set-up and maintenance costs, driven by several factors that are hard to avoid:

Infrastructure and technology investments: Implementing generative AI requires significant investments in technology and infrastructure, such as powerful computing resources, storage, and networking capabilities. These investments are necessary to support the training, fine-tuning, and deployment of AI models.

Data management and governance: Local implementation of generative AI models often involves handling large volumes of data, which requires robust data architectures and effective data governance strategies. Ensuring data privacy, security, and compliance with regulations adds to the complexity and cost of managing data in-house.

Talent acquisition and retention: Building and maintaining generative AI models require skilled professionals with expertise in AI, machine learning, and data science. Hiring and retaining such talent can be challenging and expensive, especially in a competitive job market.

Model development and maintenance: Developing and fine-tuning generative AI models can be time-consuming and resource-intensive. Regular updates and improvements to the models are necessary to maintain their performance and relevance, which adds to the ongoing maintenance costs.

Housing local Generative AI models: Pros and Cons

Pros

Confidentiality vs. other companies by design: Provided that the implementation is local, the risk of information leakage outside the company perimeter is prevented by design.

Marginal costs: Given the on-premises implementation, there is no pay-per-use fee, and the marginal cost equals the computational cost of running the model.
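To make the marginal-cost argument concrete, here is a minimal break-even sketch. It assumes a simple linear cost model (an up-front investment plus a per-request compute cost, compared with a flat pay-per-use fee). The function name and every figure in the example are hypothetical placeholders, not measured prices.

```python
from typing import Optional


def break_even_requests(
    fixed_cost: float,
    local_cost_per_request: float,
    api_fee_per_request: float,
) -> Optional[float]:
    """Number of requests at which a local model becomes cheaper than pay-per-use.

    Linear model (an assumption):
        local total = fixed_cost + local_cost_per_request * n
        api total   = api_fee_per_request * n
    Returns None when the local marginal cost is not below the API fee,
    because the local option then never catches up.
    """
    saving_per_request = api_fee_per_request - local_cost_per_request
    if saving_per_request <= 0:
        return None
    return fixed_cost / saving_per_request


# Hypothetical placeholder figures, for illustration only.
n = break_even_requests(
    fixed_cost=1_000_000,          # up-front set-up investment
    local_cost_per_request=0.002,  # compute cost of one local request
    api_fee_per_request=0.02,      # pay-per-use fee of a hosted model
)
print(f"Break-even after about {n:,.0f} requests")
```

The lower the expected request volume, the longer the fixed investment takes to pay off, which is why low marginal costs alone do not settle the decision.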

Cons

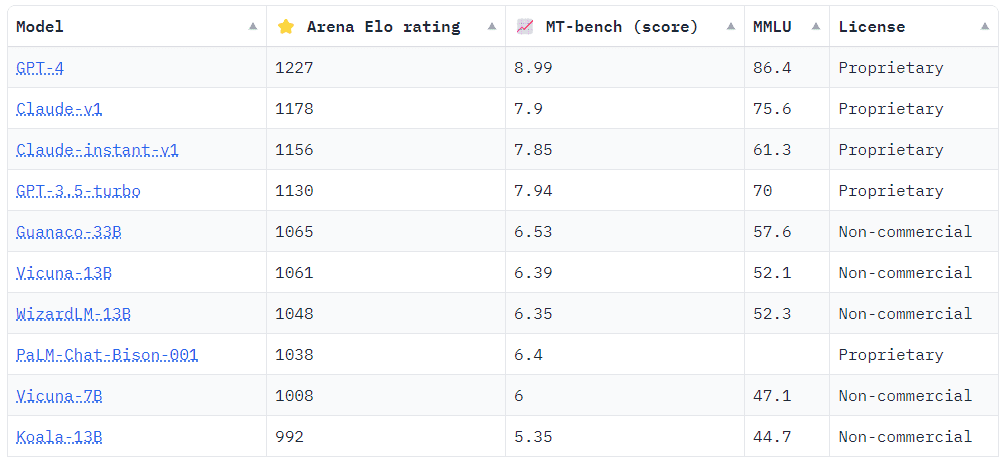

Limited model effectiveness: Currently, the best-performing models in terms of Elo rating are third-party commercial models that are not available for local implementation, such as GPT-4 (Elo 1274 as of June 2023), Claude-v1 (1224), and GPT-3.5-turbo (1155); see the leaderboard table. Therefore, a local model might deliver sub-par effectiveness.

Low model moderation: Less capital-intensive models may have fewer guardrails and less moderation. As a result, their output may be biased. This also applies to community-owned and operated models.

Set-up and maintenance costs: Although it benefits from lower marginal costs, local implementation of generative AI typically entails high set-up and maintenance costs, for the reasons outlined above. For example, Bloomberg's implementation of BloombergGPT is estimated to have required an investment of about $1 million.

English-language models only. Elo rating as of July 11, 2023. Source: https://huggingface.co/spaces/lmsys/chatbot-arena-leaderboard
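To give a sense of what these rating gaps mean in practice, the sketch below converts two ratings into an expected head-to-head win rate. It assumes the standard Elo formula (base 10, scale 400) and uses the three ratings quoted in this post; the function name is ours.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of model A against model B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Ratings as quoted in this post.
ratings = {"GPT-4": 1274, "Claude-v1": 1224, "GPT-3.5-turbo": 1155}

p = elo_expected_score(ratings["GPT-4"], ratings["GPT-3.5-turbo"])
print(f"GPT-4 vs GPT-3.5-turbo: expected win rate {p:.1%}")
```

Under these assumptions, a gap of 119 points means GPT-4's answer is expected to be preferred roughly two times out of three. A model rated well below these figures would lose head-to-head comparisons more often still.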

Utilizing Microsoft Azure dedicated instances of OpenAI models

Another option is to use dedicated instances on Microsoft Azure. Microsoft is reportedly developing a privacy-centric variant of the ChatGPT chatbot, aimed at large corporations such as banks and health care providers that have high-stakes concerns about data security and regulatory compliance, according to an article published by The Information in May 2023.

The new offering, expected to be unveiled later this year, promises to deploy ChatGPT on dedicated servers, distinct from the ones utilized by other corporations or individual users. This implies that sensitive data would not be incorporated into the training of ChatGPT's language model, effectively mitigating the risk of unintended data exposures - a scenario where a chatbot discloses sensitive information about a company's strategic plans to a competitor due to shared use of ChatGPT.

However, a caveat accompanies this elevated privacy: these bespoke versions of ChatGPT could potentially cost significantly more to operate and employ. For example, the report indicates that these private servers "could cost up to ten times more than the cost of using the regular version of ChatGPT".

Utilizing Microsoft Azure's dedicated instances of OpenAI models: Pros and Cons

Pros:

Confidentiality vs. other companies by design: Provided that the implementation is segregated, the risk of information leakage outside the company perimeter is prevented by design;

Scalability: Azure's vast cloud infrastructure makes it easy to scale up your operations as required.

Cons:

Cost: The cost of using Azure's services can add up quickly, particularly for larger operations, resulting in high unit costs and non-viable use cases;

Closed architecture: It is to be expected that use cases will be "ring-fenced" so that integration is easy with applications that are part of the Microsoft ecosystem, and only with those;

Complexity: Setting up and managing dedicated instances can be complex and require technical expertise.

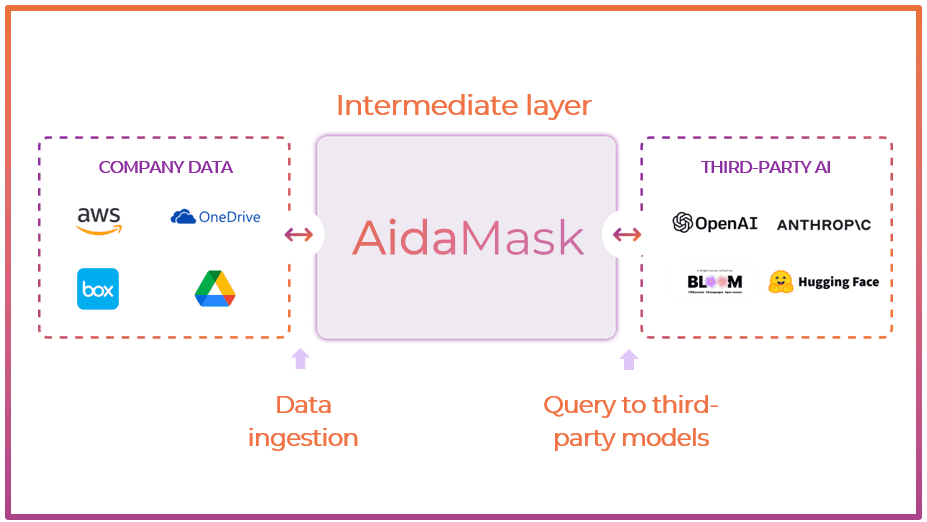

Adopting AidaMask together with existing commercial models

AidaMask is an innovative, AI-driven platform designed to facilitate the secure and efficient integration of the best Generative AI models in business environments, following a "zero-trust" approach.

Operating on an open architecture basis, AidaMask can connect to and utilize the best-of-breed AI models, allowing businesses to harness AI power tailored to their specific needs. This ensures that your organization gets the most relevant and high-performing AI solution, optimizing the decision-making process and improving operational efficiency.

The cornerstone of AidaMask is its advanced data masking technology, which obfuscates sensitive information before it is processed by the AI. This helps ensure confidentiality and compliance with data privacy regulations, providing peace of mind that sensitive information remains secure, even while unlocking the benefits of AI.
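The general idea of masking data before it reaches a third-party model can be sketched in a few lines. This is a toy illustration, not AidaMask's actual implementation: the two regular expressions, the placeholder format, and the `call_external_model` stub are all assumptions made for this example, and a real system would need much broader detection.

```python
import re

# Hypothetical detection patterns, for illustration only.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "IBAN": re.compile(r"\b[A-Z]{2}\d{2}[A-Z0-9]{11,30}\b"),
}


def mask(text: str) -> tuple[str, dict[str, str]]:
    """Replace sensitive values with placeholders; return masked text and the mapping."""
    mapping: dict[str, str] = {}
    for label, pattern in PATTERNS.items():

        def substitute(match: re.Match, label: str = label) -> str:
            value = match.group(0)
            # Reuse the placeholder if the same value was already seen.
            for placeholder, known in mapping.items():
                if known == value:
                    return placeholder
            placeholder = f"<{label}_{len(mapping) + 1}>"
            mapping[placeholder] = value
            return placeholder

        text = pattern.sub(substitute, text)
    return text, mapping


def unmask(text: str, mapping: dict[str, str]) -> str:
    """Restore the original values inside the model's response."""
    for placeholder, value in mapping.items():
        text = text.replace(placeholder, value)
    return text


def call_external_model(prompt: str) -> str:
    """Stand-in for a third-party LLM call (assumption); it simply echoes the prompt."""
    return f"Summary: {prompt}"


def safe_completion(prompt: str) -> str:
    """Mask locally, send only masked text out, then unmask the reply locally."""
    masked, mapping = mask(prompt)
    return unmask(call_external_model(masked), mapping)


original = "Contact jane.doe@example.com about account DE89370400440532013000."
masked, mapping = mask(original)
print(masked)
print(safe_completion(original))
```

The mapping between placeholders and real values never leaves the company perimeter; only the masked prompt is sent to the external model.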

Lastly, AidaMask is fully interoperable: it employs an API-only integration approach, which means it can be easily incorporated into your existing workflows, avoiding any disruption.

Adopting AidaMask together with existing commercial models: Pros and Cons

Pros:

Confidentiality vs. other companies by design: Confidential data is masked before being shared with a third-party LLM, so internal data is not exposed to external eyes;

Open architecture: AidaMask's engine is compatible with various generative AI models, making it an alluring option for businesses that require diverse AI functionalities. The open architecture also allows the best-of-breed AI models to be used depending on the specific need;

Pricing: It operates on a pay-as-you-go model, which means businesses only pay for the services they use. This makes AidaMask a cost-effective solution, especially for businesses that have fluctuating AI usage.

Cons:

Standardization: Given its current product phase, AidaMask will initially provide a "minimum required" set of functionalities across clients, with limited room for customization;

Early stage: As AidaMask is still in the early stage of market adoption, there are fewer case studies and benchmarks to reference. Businesses might be wary of this, as an early-stage product often comes with a greater potential for bugs or unanticipated issues. However, it is worth noting that early adopters often get the advantage of shaping the product's evolution and can capitalize on this over a long-term commercial relationship.

Conclusion

In the age of AI, ensuring safe use of generative models has become a necessity. Whether you choose to leverage open-source models, use dedicated instances on Azure, or adopt AidaMask, the key is to carefully consider the pros and cons of each method and choose the one that best fits your company's needs and budget. Always remember, the goal is not just to use AI, but to use it safely and responsibly.
